Martin, K. Working Paper. Privacy Myths and Mistakes: Paradoxes, tradeoffs, and the omnipotent consumer.

The goal of this article is to dispel myths permeating privacy research, practice, and policy. These myths about privacy in the market – including that there is a tradeoff between functionality and privacy, that people don’t care about privacy, and that people behave according to the privacy paradox – provide a distraction from holding firms accountable for the many ways they can (and do) violate privacy.

The myths emanate from outdated assumptions we make about privacy and markets, such as defining privacy as concealment and centering the consumer as the privacy arbiter. These mistaken assumptions serve to narrow industry’s role and obligation to respect consumer privacy. Where privacy as concealment narrows the scope of what firms should worry about, the focus on the consumer (rather than the company) as the privacy arbiter delegates responsibility to individuals rather than firms when privacy is not respected.

However, by avoiding these mistakes and recognizing that individuals have always had legitimate privacy interests in information that is disclosed, and that firms are responsible for whether and how they respect those privacy interests, I illuminate a path for future research into privacy practice and policy.

Martin, K., Nissenbaum, H., & Shmatikov, V. 2025. No Cookies for You!: Evaluating the Promises of Big Tech’s ‘Privacy-Enhancing’ Techniques. Georgetown Law Technology Review 9:1. (appendix).

We examine three common principles underlying a slew of ‘privacy-enhancing’ techniques recently deployed or scheduled for deployment by big tech companies: (1) limiting access to personal data by third parties, (2) using inferences and minimizing use and retention of raw data, and (3) ensuring personal data never leaves users’ devices. Our Article challenges these principles, but not on the grounds that techniques offered to implement them fail to achieve their stated goals. Instead, we argue that the principles themselves fall short when the privacy-enhancing technique does not address privacy-violating behavior. Through philosophical analysis and technical scrutiny, we reveal the misalignment between the principles and a sound conception of privacy. We reinforce our findings empirically with a series of factorial vignette user studies, which demonstrate a surprising gap between the principles and users’ actual privacy expectations. The most general conclusion that can be derived from our findings is that any effort to create successful privacy-enhancing systems must start with the explicit adoption of a meaningful conception of privacy.

Martin, K. 2024. Privacy, Platforms, and the Honeypot Problem. Harvard Journal of Law and Technology.

In this essay, I argue that antitrust scholars and courts have taken privacy shortcuts to mistakenly frame users as having no privacy interests in data collected by platforms. These privacy shortcuts, such as privacy as concealment or as protection from intrusion, justify platforms creating an attractive customer-facing platform to lure in customers and later exploit user data in a secondary platform or business. I call this the Honeypot Problem. As such, these privacy shortcuts hide an important mechanism used by platforms to abuse market power and justify the growth of honeypot platforms that act like a lure for consumers to collect their data only to later exploit that data in a different business.

I offer a positive account of privacy on platforms to show how the problems introduced by the privacy shortcuts can be resolved by understanding the norms of data governance for a given platform. Privacy on platforms is defined by the norms of appropriate flow — what data is collected, the conditions under which information is collected, with whom the data is shared, and whether data is used in furtherance of the context of the platform. Norms of privacy and data governance – what and how data is collected, shared, and used – will differ when on LinkedIn versus Tinder, since the platforms perform different functions and have different contextual goals, purposes, values, and actors. However, privacy and data governance norms are a mechanism by which these platforms differentiate and compete in a competitive market and abuse market power in less competitive markets.

Martin, K. 2022. Manipulation, Privacy, and Choice. North Carolina Journal of Law & Technology.

This Article examines targeted manipulation as the covert leveraging of a specific target’s vulnerabilities to steer their decisions to the manipulator’s interests. This Article positions online targeted manipulation as undermining the core economic assumptions of authentic choice in the market. Then, the Article explores how important choice is to markets and economics, how firms gained positions of power to exploit vulnerabilities and weaknesses of individuals without the requisite safeguards in place, and how to govern firms that are in the position to manipulate. The power to manipulate is the power to undermine choice in the market. As such, firms in the position to manipulate threaten the autonomy of individuals, diminish the efficiency of transactions, and undermine the legitimacy of markets.

Martin, K. 2020. Breaking the Privacy Paradox: The Value of Privacy and Associated Duty of Firms. Business Ethics Quarterly. Appendix

I specifically tackle the supposed ‘privacy paradox’, the perceived disconnect between individuals’ stated privacy expectations, as captured in surveys, and consumer market behavior in going online: individuals purport to value privacy yet still disclose information to firms. The privacy paradox is important for business ethics because the narrative of the privacy paradox defines the scope of corporate responsibility as quite narrow: firms have little to no responsibility to identify or respect privacy expectations if consumers are framed as relinquishing privacy online. However, contrary to the privacy paradox, I show consumers retain strong privacy expectations even after disclosing information in “Breaking the Privacy Paradox: The Value of Privacy and Associated Duty of Firms.” Privacy violations are valued akin to security violations in creating distrust in firms and in consumer (un)willingness to engage with firms. Where firms currently are framed as having, at most, a duty to not interfere with consumers choosing to relinquish privacy, this paper broadens the scope of corporate responsibility to suggest firms may have a positive obligation to identify reasonable expectations of privacy of individuals. In addition, research perpetuating the privacy paradox, through the mistaken framing of disclosure as proof of anti-privacy behavior, gives license to firms to act contrary to the interests of consumers.

Martin, K. and Helen Nissenbaum. 2020. What is it about location? Berkeley Technology Law Journal, 35(1).

In our third paper on privacy in public, Helen Nissenbaum and I specifically focus on the collection of location data in public spaces via different mechanisms, such as phones, Fitbits, CCTV, apps, etc. The paper, “What is it about location?” reports on a set of empirical studies that reveal how people think about location data, how these conceptions relate to expectations of privacy, and, consequently, what this might mean for law, regulation, and technology design. The results show that drawing inferences about an individual’s location and identifying the ‘place’ where they are significantly affect how appropriate people judge respective practices to be. This means that tracking an individual’s place – home, work, shopping – is seen to violate privacy, even without directly collecting GPS data. In general, individuals have strong expectations of privacy – particularly when data aggregators and data brokers are involved.

Martin, K. 2019. Privacy, Trust, and Governance. Washington University Law Review 96(6).

Currently, we (including myself!) frame individuals online as in a series of exchanges with specific firms, and privacy, accordingly, is governed to ensure trust within those relationships. However, trusting a single firm is not enough; individuals must trust the hidden online data market in general. I explore how privacy governance should also be framed as protecting a larger market to ensure consumers trust being online in “Privacy, Trust, and Governance: Or are privacy violations akin to insider trading?” I found that respondents judge uses of consumer data more appropriate if judged more within a generalized exchange (i.e., for the good of the community such as academic research) or within a reciprocal exchange (e.g., product search results) or both (credit security). However, most uses of data are deemed privacy violations and decrease institutional trust online. Also, institutional trust online impacts a consumer’s willingness to engage with a specific online partner in a trust game experiment. Interestingly, given the focus on privacy notices in the US, using privacy notices is the least effective governance mechanism whereas being subject to an audit was as effective as using anonymized data in improving consumer trust. The findings have implications for public policy and practice. First, uses of information online need not only be justified in a simple quid-pro-quo exchange with the consumer but could also be justified as appropriate for the online context within a generalized exchange. Second, if privacy violations hurt not only interpersonal consumer trust in a firm but also institutional trust online, then privacy would be governed similarly to insider trading, fraud, or bribery: to protect the integrity of the market. Punishment for privacy violations would be set to ensure bad behavior is curtailed and institutional trust is maintained rather than to remediate a specific harm to an individual.

Martin, K. 2018. The Penalty for Privacy Violations: How Privacy Violations Impact Trust Online. Journal of Business Research [IF 3.354] 82: 103-116.

A consistent theme in my work on privacy is the role of trust – e.g., as diminished with the use of privacy notices and as important to understanding privacy expectations in the mobile space. I extend this work to examine privacy and trust generally in “The Penalty for Privacy Violations: How Privacy Violations Impact Trust Online,” and measure the relative importance of violating privacy expectations to consumers’ trust in a website. The findings suggest consumers find violations of privacy expectations, specifically the secondary uses of information, to diminish trust in a website. Firms that violate privacy expectations are penalized twice: violations of privacy (1) impact trust directly and (2) diminish the importance of trust factors such as integrity and ability on trust. In addition, consumers with greater technology savvy place greater importance on privacy factors than respondents with less knowledge. Violations of privacy may place firms in a downward trust spiral by decreasing not only trust in the website but also the impact of possible mechanisms to rebuild trust such as a firm’s integrity and ability.

Martin, K. & Helen Nissenbaum. 2017. Privacy Interests in Public Records: An Empirical Investigation. Harvard Journal of Law and Technology.

The focus of the study is on data that would be deemed public according to traditional approaches. The salient subcategory that we examine is data held in government public records, by definition deemed public and by parallel assumption deemed not worthy of privacy protection. Further, the study reports on a second series of studies in which we ask subjects to respond to questions about information deemed public, consequently deserving less privacy protection, or possibly not implicating privacy at all. Our work reveals normative judgments on the appropriate use and access of personal data in a broad array of public records, such as those of births, deaths, and marriages, as well as documented transactions with offices and government agencies.

Martin, K. 2017. Do Privacy Notices Matter? Comparing the impact of violating formal privacy notices and informal privacy norms on consumer trust online. Journal of Legal Studies.

While privacy online is governed through formal privacy notices, little is known about the impact of privacy notices on trust online. I use a factorial vignette study to examine how the introduction of formal privacy governance (privacy notices) impacts consumer trust and compare the importance of respecting informal privacy norms versus formal privacy notices on consumer trust. The results show that invoking formal privacy notices decreases trust in a website.

Martin, K. & Helen Nissenbaum. 2017. Measuring Privacy: An empirical examination of common privacy measures in context. Columbia Science and Technology Law Review.

Our studies aim to reveal systematic variation lurking beneath seemingly uniform responses in privacy surveys. To do so, we revisited two well-known privacy measurements that have shaped public discourse as well as policies and practices in their respective periods of greatest impact.

Shilton, K. & Martin, K. 2016. Mobile Privacy Expectations in Context. The Information Society.

This paper reports on survey findings that identify contextual factors of importance in the mobile data ecosystem. Our survey demonstrated that overall, very common activities of mobile application companies such as harvesting and reusing location data, accelerometer readings, demographic data, contacts, keywords, name, images and friends do not meet users’ privacy expectations. But these differences are modulated by both information type and social context.

Martin, K. 2015. Privacy Notices as Tabula Rasa- How consumers project expectations on privacy notices. Journal of Public Policy and Marketing.

Recent privacy scholarship has focused on the failure of adequate notice and consumer choice as a tool to address consumers’ privacy expectations online. However, a direct examination of how complying with privacy notice is related to meeting privacy expectations online has not been performed. This paper reports the findings of an empirical examination of how judgments about privacy notices are related to privacy expectations. A factorial vignette study describing online consumer tracking asked respondents to rate the degree to which online scenarios met consumers’ privacy expectations or complied with a privacy notice. The results suggest respondents perceived the privacy notice as offering greater protections than the actual privacy notice provided. Perhaps most problematic, respondents projected the important factors of their privacy expectations onto the privacy notice. In other words, privacy notices became a tabula rasa for users’ privacy expectations.

Martin, K. 2015. Understanding Privacy Online: Development of a Social Contract Approach to Privacy. Journal of Business Ethics.

Recent scholarship in philosophy, law, and information systems suggests that respecting privacy entails understanding the implicit privacy norms about what, why, and to whom information is shared within specific relationships. These social contracts are important to understand if firms are to adequately manage the privacy expectations of stakeholders. ...

Martin, K. & Shilton, K. Forthcoming. Why Experience Matters to Privacy- How Context-Based Experience Moderates Consumer Privacy Expectations for Mobile Applications. Journal of the Association for Information Science and Technology, 67(8): 1871-1882.

Analysis of the data suggests that experience using mobile applications did moderate the effect of individual preferences and contextual factors on privacy judgments. Experience changed the equation respondents used to assess whether data collection and use scenarios met their privacy expectations. Discovering the bridge between 2 dominant theoretical models enables future privacy research to consider both personal and contextual variables by taking differences in experience into account.

Martin, K. 2015. Measuring Privacy Expectations Online. NSF Study results.

A growing body of theoretical scholarship has focused on privacy as contextually defined, thereby examining privacy expectations within a specific set of relationships or situations. However, research and regulations focus on individuals’ dispositions as the primary driver of differences across privacy expectations. The goal of this paper is to empirically compare the role of contextual factors versus individual dispositions in user privacy expectations. ...

Glac, K., Elm, D., & Martin, K. 2014. Areas of Privacy in Facebook: Expectations and value. Business and Professional Ethics Journal 33 (2/3), 147-176.

Privacy issues surrounding the use of social media sites have been apparent over the past ten years. Use of such sites, particularly Facebook, has been increasing and recently business organizations have begun using Facebook as a means of connecting with potential customers or clients. ...

Martin, K. 2013. Transaction costs, privacy, and trust: The laudable goals and ultimate failure of notice and choice to respect privacy online. First Monday 18 (12). Lead Article.

The goal of this paper is to outline the laudable goals and ultimate failure of notice and choice to respect privacy online and suggest an alternative framework to manage and research privacy. ...

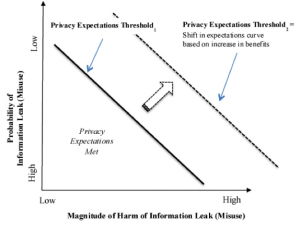

Figure 2 illustrates the theoretical relationship between harms and benefits governing privacy in practice. Privacy scholarship suggests that individuals who develop privacy rules consider the magnitude of the harm, the probability of the harm being realized, and the expected benefits of sharing information.

Martin, K. 2012. Information technology and privacy: Conceptual muddles or privacy vacuums? Ethics and information technology 14 (4), 267-284.

Firms regularly require users, customers, and employees to shift existing relationships onto new information technology, yet little is known about how technology impacts established privacy expectations and norms. This paper examines whether and how privacy expectations change based on the technological platform of an information exchange. ...

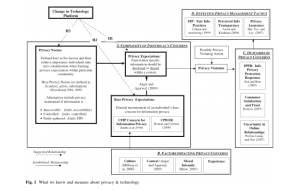

A general model of privacy expectations is developed by leveraging two areas of privacy scholarship focusing on (1) individual-specific base privacy concerns and (2) contextually defined privacy norms. Figure 1 depicts how base privacy concerns, contextually defined privacy norms, and privacy expectations fit within the larger picture of privacy research.

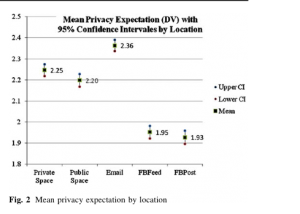

Figure 2 shows both Facebook scenarios (a Facebook Post and a Facebook Feed) as having a lower mean privacy expectation; respondents were more apt to rate information OK to Share on Facebook than other locations. … While locating the exchange on Facebook, either as a Facebook Post or as a Facebook Feed, increases the probability that the information would be expected to be shared, locating the exchange on email does not follow this trend. The cumulative probability of email emulates the distribution for a verbal exchange in a private room.

A growing body of theory has focused on privacy as being contextually defined, where individuals have highly particularized judgments about the appropriateness of what, why, how, and to whom information flows within a specific context. Such a social contract understanding of privacy could produce more practical guidance for organizations and managers who have employees, users, and future customers all with possibly different conceptions of privacy across contexts. ...

Martin, K. 2011. TMI (Too Much Information)- The Role of Friction and Familiarity in Disclosing Information. Business and Professional Ethics Journal 30 (1/2), 1-32.

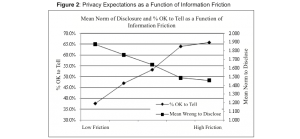

The goal of this study is to identify the norms of disclosing information: what do individuals take into consideration when disclosing information? The findings show that an individual’s relationship to the recipient (familiarity) and the degree to which the information is protected from being easily transferred to others (friction) positively influence the odds that disclosure is judged to be within privacy expectations. ...

For high friction scenarios, the disclosure of information was more likely to be judged within privacy expectations and considered OK to Tell compared to low friction scenarios, where the disclosure of information was deemed more Wrong to Tell, as illustrated in Figure 2.

Martin, K. 2010. Privacy Revisited- From Lady Godiva’s Peeping Tom to Facebook’s Beacon Program in D. Palmer (Ed.) Ethical Issues in E-Business: Models and Frameworks. IGI Global Publishers.

The underlying concept of privacy has not changed for centuries, but our approach to acknowledging privacy in our transactions, exchanges, and relationships must be revisited as our technological environment – what we can do with information – has evolved. The goal of this chapter is to focus on the debate over the definition of privacy as it is required for other debates and has direct implications for how we recognize, test, and justify privacy in scholarship and practice. ...

Martin, K. & Freeman, R.E. 2003. Some Problems with Employee Monitoring. Journal of Business Ethics 43:353-361.

Employee monitoring has raised concerns from all areas of society: business organizations, employee interest groups, privacy advocates, civil libertarians, lawyers, professional ethicists, and every combination possible. Each advocate has its own rationale for or against employee monitoring, whether it be economic, legal, or ethical. ...